Elasticsearch Series

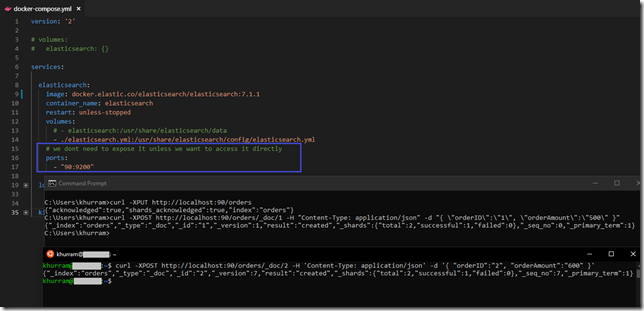

Kibana lets us work with Elasticsearch in the most productive way. Continuing from the previous post, let's expose our Elasticsearch server and, using its REST APIs, create an “index” and a document in it directly with curl.

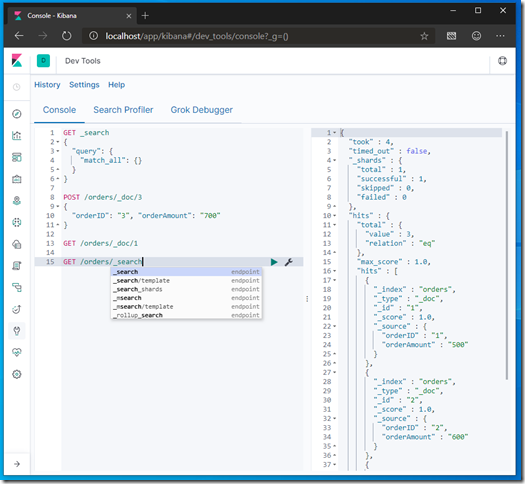
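For readers who would rather script these two calls, here is a minimal Python sketch of the same idea. The server address, the `_doc` endpoint (which assumes a reasonably recent Elasticsearch version) and the order fields are all assumptions for illustration; they are not taken from the screenshot.

```python
import json
import urllib.request

# Assumed address of the exposed Elasticsearch server.
ES_URL = "http://localhost:9200"


def build_request(method, path, body=None):
    """Build (but do not send) a JSON request for the Elasticsearch REST API."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    return urllib.request.Request(
        ES_URL + path,
        data=data,
        method=method,
        headers={"Content-Type": "application/json"},
    )


def send(request):
    """Send a prepared request and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# PUT /orders creates the index.
create_index = build_request("PUT", "/orders")

# POST /orders/_doc adds a document; the fields are hypothetical.
add_order = build_request(
    "POST", "/orders/_doc", {"customer": "alice", "orderAmount": 250}
)
```

Calling `send(create_index)` and then `send(add_order)` against a running server would perform the two requests. Note that no quote escaping is needed here, because `json.dumps` produces the body.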

You will realize that it's quite tedious to work with Elasticsearch this way, especially in the Windows Command Prompt, where you have to escape the quote characters. This is where Dev Tools in Kibana can help us work better.

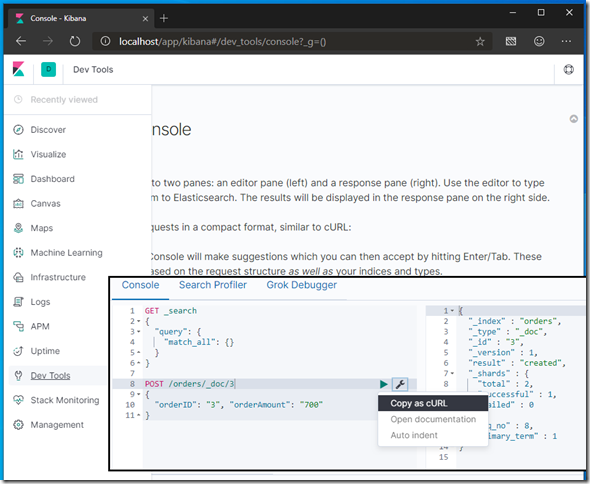

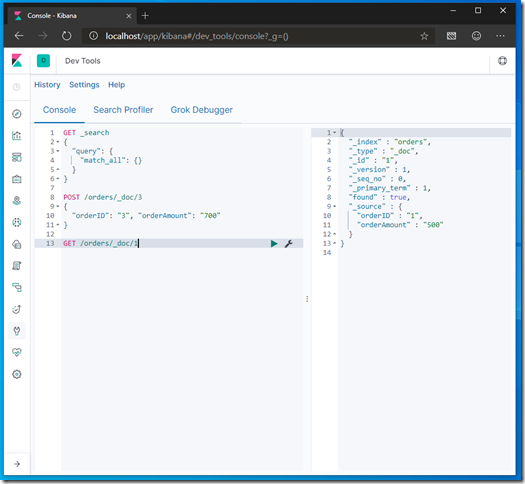

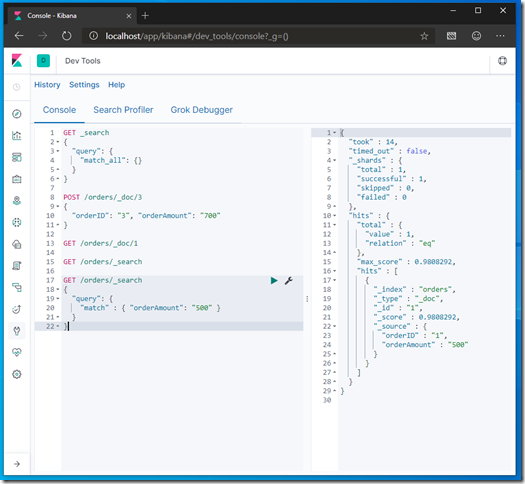

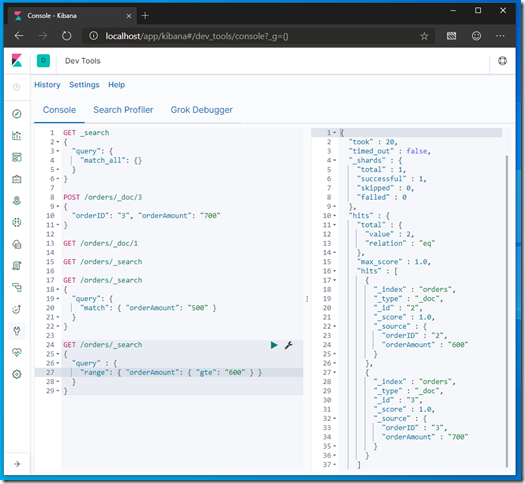

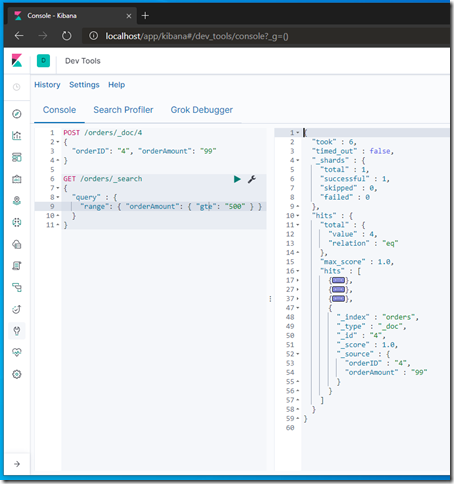

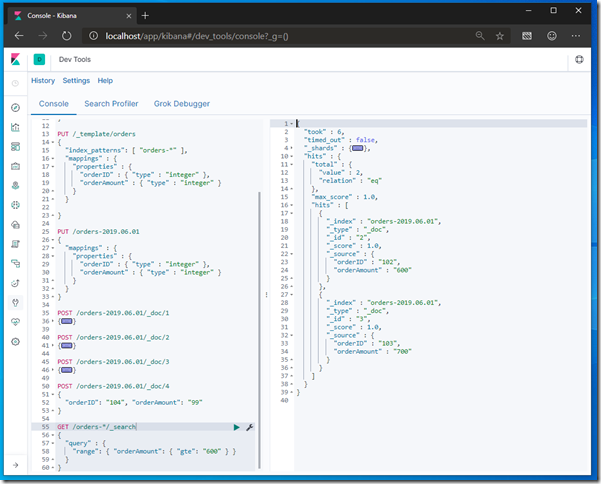

Dev Tools has an option to copy a request as a curl command. Let's continue our API exploration by creating a couple more orders and then searching them.

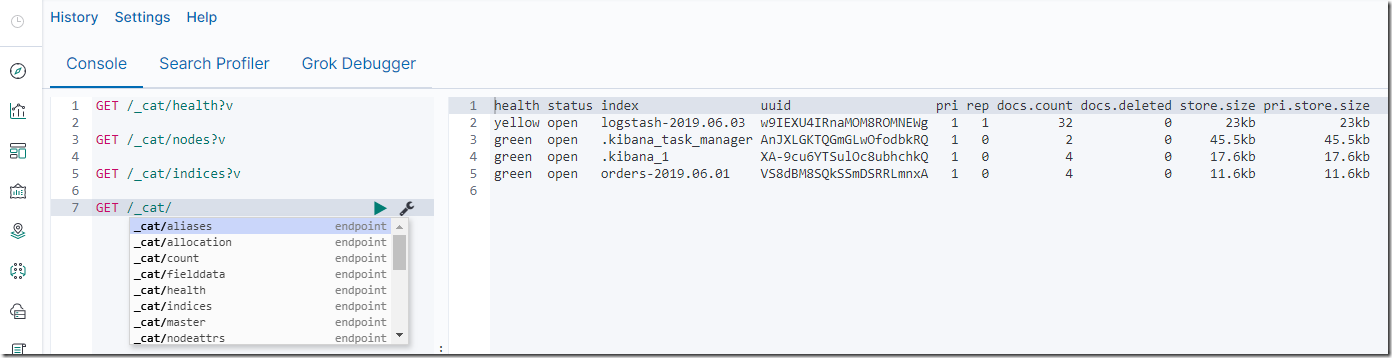

- Notice there are special endpoints under different HTTP verbs, like _search with GET to query the data. Also notice that Kibana autocompletes as we type, so we can discover these special URLs/endpoints.

Elasticsearch provides a full Query Domain Specific Language (DSL) based on JSON to define queries. A query consists of two types of clauses: leaf query clauses, which look for a value in a particular field (such as match, term or range), and compound query clauses, which wrap and combine other clauses (such as bool). You can learn about this DSL at https://www.elastic.co/guide/en/elasticsearch/reference/current/query-dsl.html
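As a rough illustration of the two clause types, the sketch below builds a search body in Python that could be sent to `GET /orders/_search`. The `customer` field and the values are hypothetical; only `orderAmount` comes from this post.

```python
import json

# Leaf clauses: each one tests a single field.
match_clause = {"match": {"customer": "alice"}}          # hypothetical field
range_clause = {"range": {"orderAmount": {"gte": 500}}}  # amount >= 500

# Compound clause: bool combines other clauses.
# "must" clauses contribute to the relevance score; "filter" clauses only include or exclude.
query = {
    "query": {
        "bool": {
            "must": [match_clause],
            "filter": [range_clause],
        }
    }
}

# Print the JSON body, ready to paste into Dev Tools.
print(json.dumps(query, indent=2))
```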

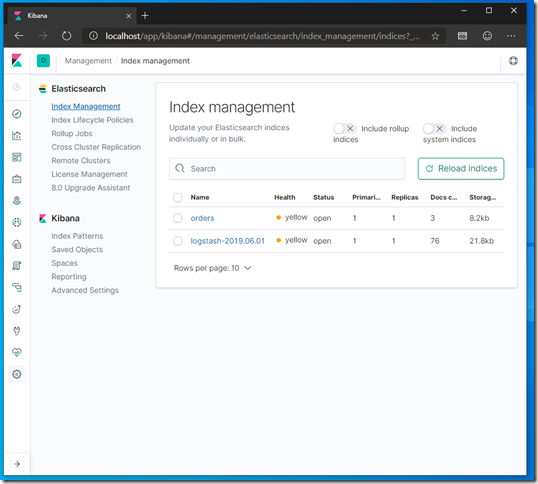

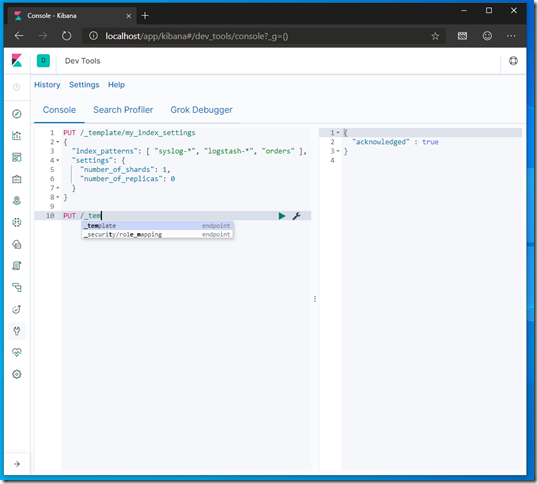

We also have the Management section, from where we can manage not only Kibana but also Elasticsearch. In recent versions they have improved this section a lot and have brought over many components from X-PACK (Elastic.co's commercial add-on) to the free edition. You will notice that our indexes are not healthy. This is because the default index settings call for multiple shards (distributing data across many buckets) and for replicas, and on a single node the replica shards have nowhere to be allocated. Given we have a single node, we can change the index settings by putting a new index settings document under /_template with appropriate index_patterns.

- With the number_of_shards and number_of_replicas settings adjusted for a single node, if we delete the existing indexes (using Index Management) and recreate them, we should now see healthy / green indexes
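A template body along these lines could look like the following sketch. The template name `single-node`, the `orders*` pattern and the values of one shard and zero replicas are assumptions chosen for a single-node setup, not necessarily the exact values used in this post.

```python
import json


def single_node_template(index_patterns):
    """Build a template body suited to a one-node cluster (assumed values)."""
    return {
        "index_patterns": index_patterns,
        "settings": {
            "number_of_shards": 1,    # keep all data in a single shard
            "number_of_replicas": 0,  # no replicas, so nothing stays unassigned
        },
    }


template = single_node_template(["orders*"])

# Hypothetical template name; print the request in Dev Tools form.
print("PUT /_template/single-node")
print(json.dumps(template, indent=2))
```

The template only applies to indexes created after it is stored, which is why the existing indexes have to be deleted and recreated.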

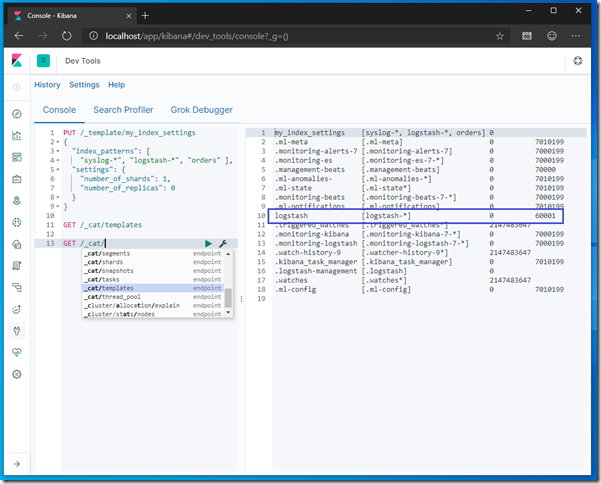

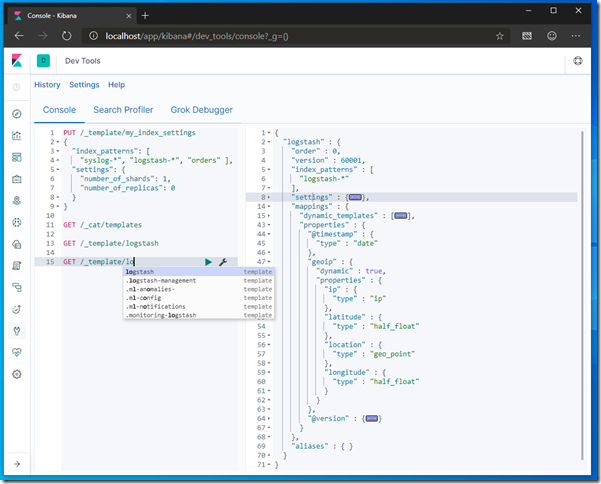

We can find the template created above, along with other templates, using the GET /_cat/templates endpoint. To view a particular template, use the GET /_template/template-name endpoint. In the previous post we used Logstash, which creates a template for its indexes as well. Let's investigate this template.

- Notice the mappings section and how the geoip field is defined

In the previous post we used the geoip plugin to transform the ufw_src_ip field into user-friendly geolocation fields and drew them on the Map. That could only work with logstash-* index names, because the template that defines the geoip field applies only to that pattern. If we want different index names, say ufw-*, we can now add a template for the ufw-* pattern with this geoip field definition, and we should be able to use the Map visualization with our custom ufw-* indexes.

- As an exercise, go ahead and change the index name from logstash-* to ufw-* in the work done in the previous post
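As a starting point for the exercise, here is a hedged Python sketch of what a template for the ufw-* pattern might contain. It assumes typeless mappings (Elasticsearch 7.x or later) and copies only the part of the geoip definition that the Map needs, namely a `geo_point` location. The template name `ufw` is hypothetical, and the authoritative field definition is whatever `GET /_template/logstash` returns on your server.

```python
import json

# Assumed subset of the geoip mapping; check it against the Logstash template.
geoip_mapping = {
    "dynamic": True,
    "properties": {
        "ip": {"type": "ip"},
        "location": {"type": "geo_point"},  # the type the Map visualization relies on
    },
}

ufw_template = {
    "index_patterns": ["ufw-*"],
    "mappings": {"properties": {"geoip": geoip_mapping}},
}

# Hypothetical template name; print the request in Dev Tools form.
print("PUT /_template/ufw")
print(json.dumps(ufw_template, indent=2))
```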

Our /orders index has an issue: the orderAmount field is not a numeric data type, and this becomes a problem when using range queries. For instance, if we search for orders with an amount greater than or equal to 500, an order with amount 99 will also appear, because compared as strings "99" >= "500". To fix this, we can either use a template with an appropriate mappings section or supply mappings when creating the index. If we have multiple indexes following some pattern, say orders-*, we can search across them using the GET /pattern/_search endpoint.
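Python compares strings character by character too, so the effect is easy to reproduce. The sketch below shows the comparison and then an index body with an explicit numeric mapping. The `double` type and the typeless mapping layout (Elasticsearch 7.x or later) are assumptions.

```python
import json

# Compared as strings, "9" sorts after "5", so "99" counts as >= "500".
as_strings = "99" >= "500"
# Compared as numbers, 99 is correctly smaller than 500.
as_numbers = 99 >= 500

print(as_strings)  # True
print(as_numbers)  # False

# Index body that declares orderAmount as a number (assumed type: double).
orders_index = {
    "mappings": {
        "properties": {
            "orderAmount": {"type": "double"},
        }
    }
}

# Print the request in Dev Tools form.
print("PUT /orders")
print(json.dumps(orders_index, indent=2))
```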

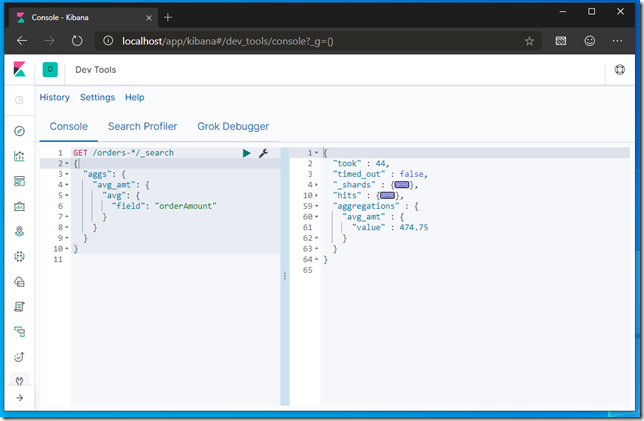

Given we now have properly typed fields, we can use Aggregations.

- Elasticsearch has a comprehensive Aggregation framework that we can use to build simple to complex data summaries; visit https://www.elastic.co/guide/en/elasticsearch/reference/current/search-aggregations.html for more details
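A simple summary over the numeric orderAmount field could be requested with a body like the one sketched below, sent to `GET /orders/_search`. The aggregation names `total_amount` and `average_amount` are arbitrary labels chosen for this example.

```python
import json

aggs_request = {
    "size": 0,  # return only the aggregation results, not the matching documents
    "aggs": {
        "total_amount": {"sum": {"field": "orderAmount"}},
        "average_amount": {"avg": {"field": "orderAmount"}},
    },
}

# Print the JSON body, ready to paste into Dev Tools.
print(json.dumps(aggs_request, indent=2))
```

These metric aggregations need a numeric field, which is why the mapping had to be fixed first.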

There are some interesting endpoints under GET /_cat, for instance _cat/health for the cluster health, _cat/nodes for the health of the nodes in the cluster and _cat/indices for listing indexes along with their health. Unlike the JSON responses elsewhere, these endpoints return plain text, and if the bare columns are hard to read we can append ?v to the endpoint to include column headers.
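For scripted checks, the same endpoints can be addressed from Python. The helper below is a small sketch that only builds the URLs. The server address is an assumption, and `cat_url` is a hypothetical helper name. The `v` and `format` query parameters are the ones the _cat APIs accept.

```python
from urllib.parse import urlencode

# Assumed address of the Elasticsearch server.
ES_URL = "http://localhost:9200"


def cat_url(endpoint, verbose=True, as_json=False):
    """Build a _cat URL, optionally with column headers or JSON output."""
    params = {}
    if verbose:
        params["v"] = "true"       # include column headers
    if as_json:
        params["format"] = "json"  # ask for JSON instead of plain text
    query = "?" + urlencode(params) if params else ""
    return ES_URL + "/_cat/" + endpoint + query


for name in ("health", "nodes", "indices"):
    print(cat_url(name))
```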

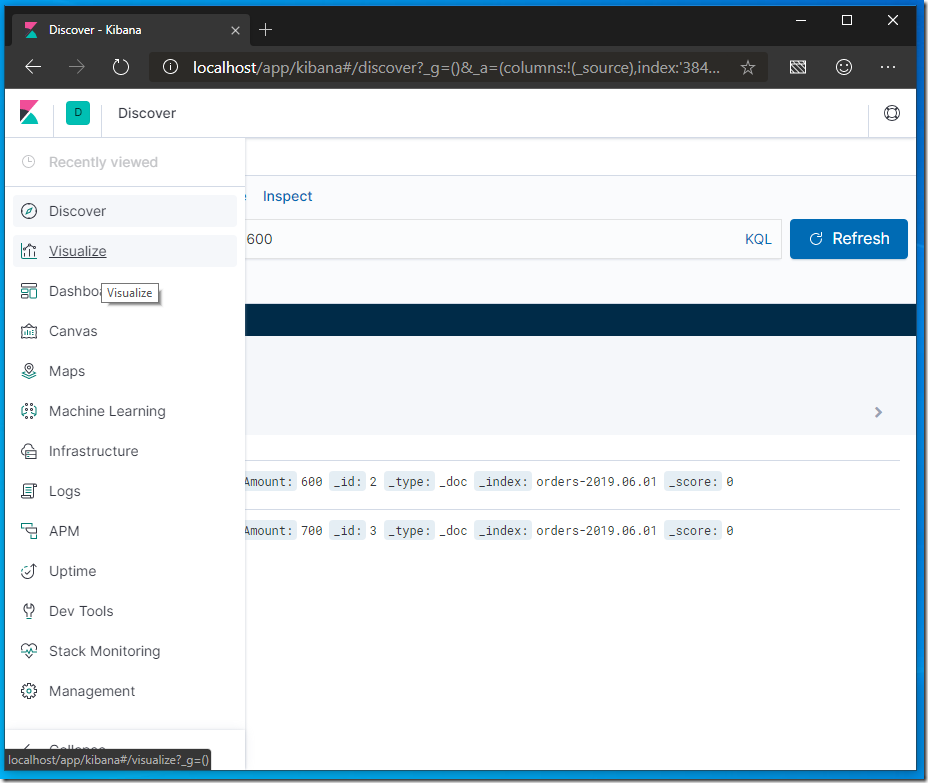

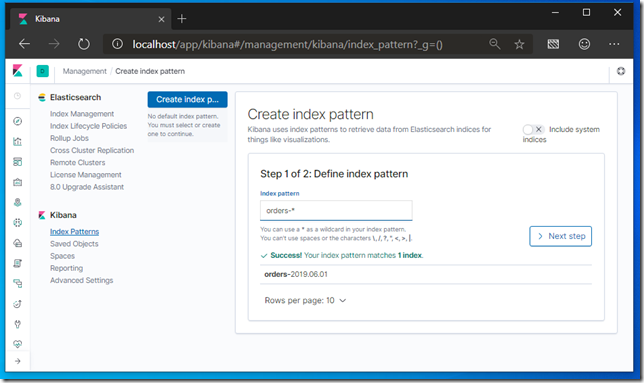

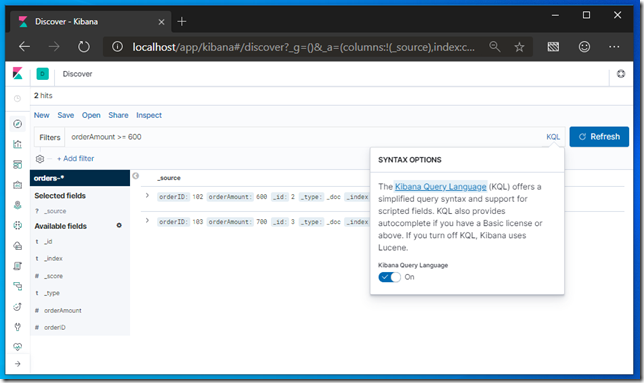

To use Kibana’s Discover and Visualize tools, we can define a Kibana index pattern, say orders-*, so that it includes our orders-YYYY.MM.DD Elasticsearch indexes. Using the Discover tool, we can then search / query our data with either the Kibana Query Language (KQL), which was introduced in recent versions of Kibana, or the Apache Lucene syntax.

The queries used above are added to the Elk folder at https://github.com/khurram-aziz/HelloDocker

- Go ahead and try to use Visualization and Dashboard tools