Rancher Series

- Part 1: RancherOS

- Part 2: Rancher–First Application

- Part 3: Rancher Infrastructure Services

- Part 4: Floating IP and Containers

Let’s deploy the first application on the Rancher environment that we created in the first post. We will be deploying Let’s Chat, an open source Slack clone built using Node.js and MongoDB. There is an official Let’s Chat Docker image, so we have a quick, clean start.

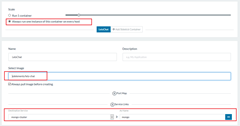

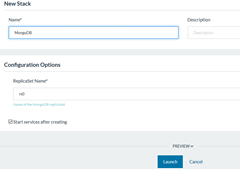

As we set up two hosts for our Rancher environment earlier, we will try to deploy the application in a highly available and scalable configuration. Rancher has a catalog, and from there we can deploy the MongoDB stack. This particular stack sets up three clustered MongoDB containers with the specified replica set name. I went ahead and set it up with the default values. There are a few “sidekick” containers. Sidekicks are Rancher specific: they are containers “bound” to a primary container, and they are always deployed and run together with it as a unit. We can use this feature to set up volumes or run setup scripts for the primary container. There are two sidekicks for each Mongo container in the catalog item.

On launch, Rancher sets up the required containers across our two hosts. It downloads the Docker images from Docker Hub; for large images, setup may take a while depending on the network speed. It feels bizarre that Rancher has no built-in option to orchestrate image downloads as well, so that network bandwidth is not wasted downloading the same image once per host.

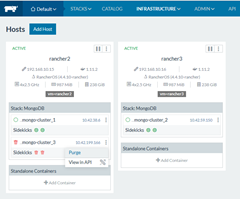

Once it is set up, we can scale the MongoDB stack up or down; I set the scale to two so that I have one MongoDB container per host. We can view the CPU, memory and network usage of a container and review its sidekick containers from the interface. Knowing the Docker image name of a sidekick, we can look it up on Docker Hub and, if we are lucky, find its source from there or by googling! This is a fantastic way to learn how the community has built these stacks; I encourage you to go ahead and try other stacks as well!

Rancher provides a modern user interface to manage and orchestrate our Docker containers. We can view our hosts and the containers running on them, and we can stop, start or restart the containers easily from the interface.

Given that we scaled down the MongoDB stack, there is one stopped container instance left over; we can go ahead and purge it.

Now our MongoDB is running in replication mode across two hosts; even if a container goes down, or one of the machines running these containers goes down, the MongoDB service will remain available.

Next we need to deploy the Let’s Chat Docker container in a similar way. Notice that I have chosen to run one instance on each host, so it gets deployed on all participating hosts; I have specified the official Docker image and linked our MongoDB cluster as the “mongo” service, which the image requires.

We need one more step. If we run the Let’s Chat container as is, it will fail with an error connecting to our MongoDB cluster. Luckily, the official Let’s Chat container lets us set environment variables, and one of them is the Mongo connection string. For the cluster we need to specify the IP addresses of all the MongoDB nodes, which we can find on the Infrastructure page.

Let’s set the LCB_DATABASE_URI environment variable for the Let’s Chat container and give the IPs of all the MongoDB nodes in the connection string, as Mongoose requires; in my case the connection string is mongodb://10.42.38.6:27017,10.42.59.150:27017/letschat
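
To make the shape of that connection string explicit, here is a minimal Python sketch that joins a list of node addresses into one multi-host URI. The helper name `build_mongo_uri` is made up for illustration; the addresses, port and database name are the ones from my setup above.

```python
def build_mongo_uri(hosts, database, port=27017):
    """Join host addresses into a single multi-host MongoDB connection string.

    Hypothetical helper for illustration only; it is not part of Rancher
    or Let's Chat.
    """
    if not hosts:
        raise ValueError("at least one MongoDB host is required")
    # Every node appears as host:port, separated by commas.
    host_list = ",".join(f"{host}:{port}" for host in hosts)
    return f"mongodb://{host_list}/{database}"


if __name__ == "__main__":
    nodes = ["10.42.38.6", "10.42.59.150"]  # IPs from the Infrastructure page
    print(f"LCB_DATABASE_URI={build_mongo_uri(nodes, 'letschat')}")
```

If the MongoDB containers are recreated and get new IPs, the value has to be rebuilt from the new addresses.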

Let’s go ahead and deploy the container; Rancher will download the image from Docker Hub and shortly run an instance on all participating hosts.

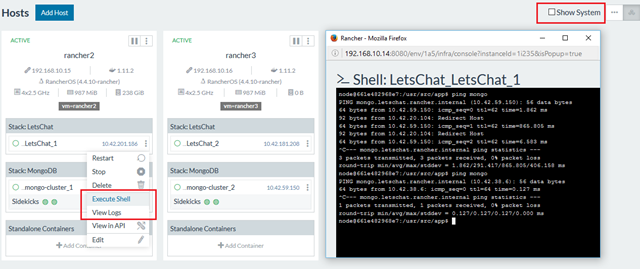

The Rancher interface also has options to connect to a container’s shell or view its logs; using these, system administrators or developers can debug and solve issues.

We can connect to the shell and confirm that Rancher has made the appropriate hosts entries for the Mongo cluster.
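
If Python happens to be available in the container (an assumption; the Let’s Chat image may not ship it), the same check could be scripted. The sketch below parses hosts-file text into a name-to-IP map and prints the entries whose name contains "mongo"; the function name `parse_hosts` and the `/etc/hosts` location are my assumptions, not something Rancher documents here.

```python
from pathlib import Path


def parse_hosts(text):
    """Map each hostname or alias in hosts-file text to its IP address."""
    entries = {}
    for line in text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            entries[name] = ip
    return entries


if __name__ == "__main__":
    hosts_file = Path("/etc/hosts")  # assumed location inside the container
    if hosts_file.exists():
        for name, ip in parse_hosts(hosts_file.read_text()).items():
            if "mongo" in name:
                print(f"{name} -> {ip}")
```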

If the web application fails to connect to MongoDB or reports errors, we can review them with View Logs and debug and fix them from the shell. For instance, if we don’t set the environment variable above for the clustered MongoDB, the application will fail to run and View Logs will show the Mongoose error!

- Note that Rancher and other similar products have similar features; as developers we should use the standard output and error streams and emit appropriate logs that system administrators can use. They will also appreciate it if we provide utilities in the containers to troubleshoot or fix issues on their own!
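
As a rough illustration of that advice, here is one way an application could route its logs to the standard streams so that a log viewer like Rancher’s can pick them up. This is a sketch using Python’s standard `logging` module, not how Let’s Chat itself logs; `make_logger`, `MaxLevelFilter` and the logger name are made-up names.

```python
import logging
import sys


class MaxLevelFilter(logging.Filter):
    """Let through only records at or below a given level."""

    def __init__(self, max_level):
        super().__init__()
        self.max_level = max_level

    def filter(self, record):
        return record.levelno <= self.max_level


def make_logger(name, out=None, err=None):
    """Send INFO records to stdout and WARNING or above to stderr."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()
    formatter = logging.Formatter("%(levelname)s %(name)s: %(message)s")

    out_handler = logging.StreamHandler(sys.stdout if out is None else out)
    out_handler.addFilter(MaxLevelFilter(logging.INFO))
    err_handler = logging.StreamHandler(sys.stderr if err is None else err)
    err_handler.setLevel(logging.WARNING)

    for handler in (out_handler, err_handler):
        handler.setFormatter(formatter)
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = make_logger("letschat-demo")  # hypothetical logger name
    log.info("connected to MongoDB")
    log.error("could not connect to MongoDB")
```

Nothing is written to a file inside the container, so the logs are not lost with the container and need no extra volume.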

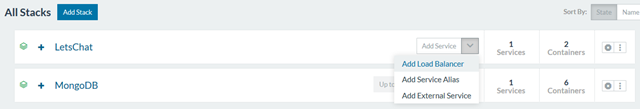

So now we have two instances of the web server container and a clustered MongoDB; for fail-over and scalability we need a “Load Balancer”. Rancher has one built in, so let’s deploy an instance of it!

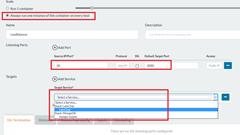

Let’s use the same “always run one instance on every host” scale option for the Load Balancer, map port 8080 of the service container (the official Let’s Chat container runs the app on port 8080) and link the service. Rancher’s Load Balancer is HAProxy based; instead of building our own, we can use their offering. We link our web service, and it will find the running containers of our web app (even if there is just one) and load balance across them seamlessly.
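
To give a feel for what “load balance across the running containers” means, here is a toy round-robin selector in Python. It is only a sketch of the general idea, assuming a plain round-robin policy; it is not Rancher’s or HAProxy’s actual implementation, and the class name and backend addresses are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends one after another, wrapping around at the end."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._cycle = itertools.cycle(self._backends)

    def next_backend(self):
        """Return the backend that should receive the next request."""
        return next(self._cycle)


if __name__ == "__main__":
    # Hypothetical container addresses; the app listens on port 8080.
    balancer = RoundRobinBalancer(["10.42.0.11:8080", "10.42.0.12:8080"])
    for _ in range(4):
        print(balancer.next_backend())
```

With a single backend the same container is returned every time, which matches the one-container case mentioned above.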

If we want to, we can further customize the HAProxy configuration file; the user interface allows us to add configuration. In our case, nothing else is required.

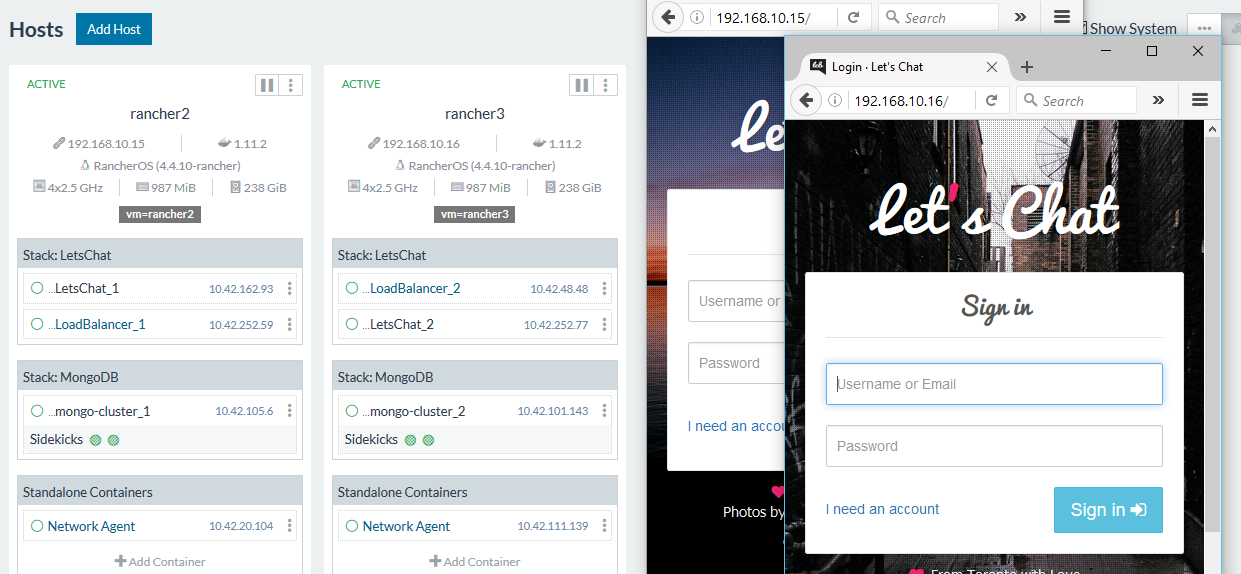

Now our stack is complete and we can access the application at the two IPs of our participating hosts!

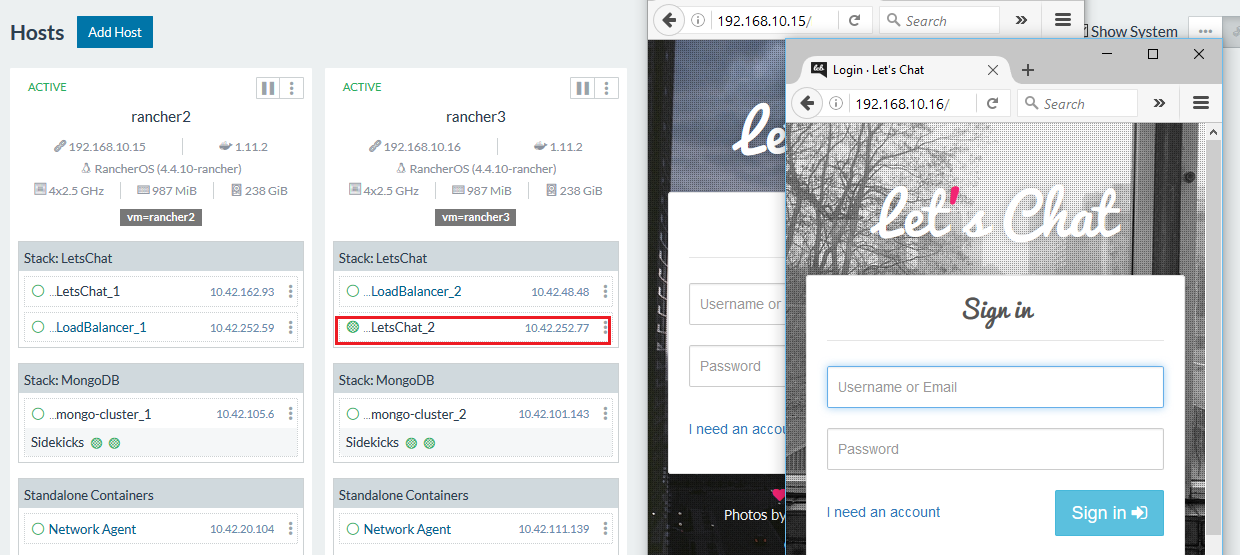

You might notice that the IPs of the containers have changed; that is because I went ahead and “cloned” the containers (Rancher supports this; it is the quickest way to copy existing containers), setting the environment variable accordingly. While making the new containers I chose not to have them restarted automatically (the default configuration), so that I can stop a few and simulate container failure. I am stopping one web server container, but I am still able to access my application.

So we have a fairly complete fail-over and scale-out implementation here. If a MongoDB container or a Node.js container goes down, things will continue to work; even if traffic is coming in on one IP, load is distributed across all participating nodes. The only questions left are: what if one of the Load Balancers goes down? And which IP should we give in the DNS for our application: 192.168.10.15 (Rancher2 VM) or 192.168.10.16 (Rancher3 VM)? If we give both IPs, the traffic will be balanced across these two machines, but what if a whole host is brought down for maintenance? We will figure it out in the next post!